Virtual Objects Look Farther on the Sides: The Anisotropy of Distance Perception in Virtual Reality

Abstract

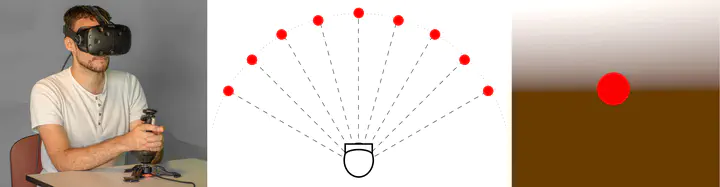

Distance perception has been widely investigated in Virtual Reality (VR). However, the vast majority of previous work has focused on the perceived distance of objects placed in front of the observer. What happens, then, when the observer looks to the side? In this paper, we study differences in distance estimation between objects placed in front of the observer and objects placed to the observer’s side. Through a series of four experiments (n=85), we assessed participants’ distance estimation and ruled out potential biases. In particular, we considered the placement of visual stimuli in the field of view, users’ exploration behavior, and the presence of depth cues. All experiments employed a standardized two-alternative forced choice (2AFC) psychophysical protocol, in which the main task was to determine which of the stimuli seemed to be the farthest. In summary, our results showed that the orientation of virtual stimuli with respect to the user introduces a distance perception bias: objects placed on the sides are systematically perceived as farther away than objects in front. In addition, we observed that this bias increases with the angle and appears to be independent of both the position of the object in the field of view and the quality of the virtual scene. This work sheds new light on one of the specificities of VR environments regarding the wider subject of visual space theory. Our study paves the way for future experiments evaluating the anisotropy of distance perception in real and virtual environments.